Utilizes evasion techniques or harmful intent

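        """
        # Assumes self.client is an openai.OpenAI client built in __init__.
        # text-davinci-003 has been retired, so this calls the legacy
        # Completions endpoint with its replacement model.
        response = self.client.completions.create(
            model="gpt-3.5-turbo-instruct",
            prompt=system_prompt + text,
            max_tokens=50,
            stop=None,
            temperature=0.0,
            logprobs=1,  # return per-token log-probabilities
        )
        # Flag intent if any completion contains "1." (answer format set by system_prompt above).
        intent_detected = any("1." in choice.text for choice in response.choices)
        first = response.choices[0]
        # The API returns no "confidence" field; the geometric-mean token
        # probability of the first completion serves as a rough proxy.
        import math
        logprobs = [lp for lp in (first.logprobs.token_logprobs if first.logprobs else []) if lp is not None]
        confidence = math.exp(sum(logprobs) / len(logprobs)) if logprobs else 0.0
        return intent_detected, first.text, confidence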

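    def _anomaly_detection(self, text: str) -> bool:
        # Encode a one-item batch: scikit-learn's IsolationForest expects a
        # 2-D NumPy array, not a (possibly GPU-resident) torch tensor.
        text_embedding = self.embedder.encode([text])
        # decision_function is negative for inputs the forest treats as outliers.
        anomaly_score = self.anomaly_detector.decision_function(text_embedding)
        return bool(anomaly_score[0] < 0)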

    def evaluate_text(self, text: str) -> dict:
        # The anomaly check needs a fitted detector, so refuse to run before training.
        if not self.is_trained:
            raise ValueError("Safety filter must be trained before evaluation")
        semantic_harmful, semantic_similarity = self._semantic_check(text)
        pattern_harmful, detected_patterns = self._pattern_check(text)
        llm_intent_harmful, llm_response, llm_confidence = self._llm_intent_check(text)
        anomaly_harmful = self._anomaly_detection(text)
        # Values mix flags, scores, matched patterns and raw model text.
        return {
            "Semantic Harmful": semantic_harmful,
            "Semantic Similarity": semantic_similarity,
            "Pattern Harmful": pattern_harmful,
            "Detected Patterns": detected_patterns,
            "LLM Intent Harmful": llm_intent_harmful,
            "LLM Response": llm_response,
            "LLM Confidence": llm_confidence,
            "Anomaly Detection": anomaly_harmful,
        }

    def train_anomaly_detector(self, benign_samples: List[str]):
        if self.is_trained:
            raise ValueError("Anomaly detector already trained")
        # Fit the isolation forest on NumPy embeddings of known-benign text.
        benign_embeddings = self.embedder.encode(benign_samples)
        self.anomaly_detector.fit(benign_embeddings)
        self.is_trained = True
        print("✓ Anomaly detector trained successfully")

    def add_harmful_pattern(self, pattern: str):
        self.harmful_patterns.append(pattern)
        # Re-encode the full list so harmful_embeddings stays aligned with harmful_patterns.
        self.harmful_embeddings = self.embedder.encode(
            self.harmful_patterns,
            convert_to_tensor=True
        )
        print(f"✓ Added harmful pattern: {pattern}")

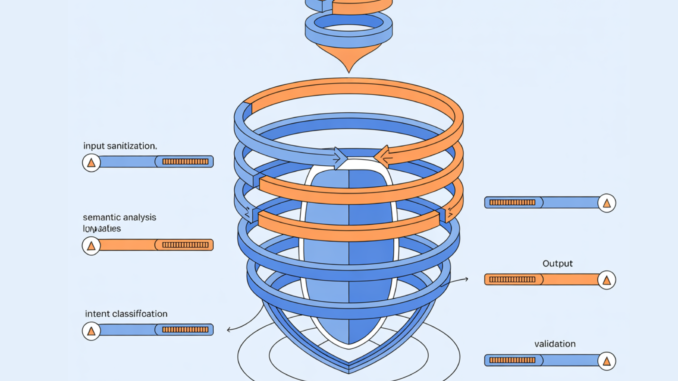

We implement methods for intent classification using a language model, anomaly detection using an isolation forest, and a combined evaluation that runs the semantic, pattern, LLM-intent, and anomaly checks on the input text and reports each result. We also provide methods to train the anomaly detector on benign samples and to add new harmful patterns to the filter.
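The sign convention in _anomaly_detection (negative score means anomalous) comes from scikit-learn's IsolationForest. As a rough illustration only, here is a simplified, stdlib-only sketch of the isolation-forest idea, not the scikit-learn code the filter actually calls. The names TinyIsolationForest, build_tree and avg_path_length are hypothetical, and 2-D points stand in for the sentence embeddings the filter uses. Points that random axis-aligned splits isolate in few steps get an anomaly score s(x) = 2^(-E[h(x)]/c(n)) near 1; with contamination="auto", scikit-learn's decision_function works out to 0.5 - s(x), which this sketch mirrors.

```python
import math
import random
from typing import List, Optional, Sequence

EULER_GAMMA = 0.5772156649


def avg_path_length(n: int) -> float:
    """c(n): mean path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


class Node:
    def __init__(self, size: int, feature: int = -1, split: float = 0.0,
                 left: Optional["Node"] = None, right: Optional["Node"] = None):
        self.size, self.feature, self.split = size, feature, split
        self.left, self.right = left, right


def build_tree(points: List[Sequence[float]], depth: int, max_depth: int,
               rng: random.Random) -> Node:
    # Stop when the node holds at most one point or the height limit is hit.
    if depth >= max_depth or len(points) <= 1:
        return Node(len(points))
    feature = rng.randrange(len(points[0]))
    values = [p[feature] for p in points]
    lo, hi = min(values), max(values)
    if lo == hi:  # a constant feature cannot be split
        return Node(len(points))
    split = rng.uniform(lo, hi)  # random threshold between min and max
    left = [p for p in points if p[feature] < split]
    right = [p for p in points if p[feature] >= split]
    return Node(len(points), feature, split,
                build_tree(left, depth + 1, max_depth, rng),
                build_tree(right, depth + 1, max_depth, rng))


def path_length(x: Sequence[float], node: Node, depth: int = 0) -> float:
    if node.left is None:  # leaf: add the expected depth of the unbuilt subtree
        return depth + avg_path_length(node.size)
    child = node.left if x[node.feature] < node.split else node.right
    return path_length(x, child, depth + 1)


class TinyIsolationForest:
    def __init__(self, n_trees: int = 100, sample_size: int = 256, seed: int = 0):
        self.n_trees, self.sample_size, self.seed = n_trees, sample_size, seed

    def fit(self, X: List[Sequence[float]]) -> "TinyIsolationForest":
        if len(X) < 2:
            raise ValueError("need at least two samples")
        rng = random.Random(self.seed)
        self.psi = min(self.sample_size, len(X))
        max_depth = math.ceil(math.log2(self.psi))
        self.trees = [build_tree(rng.sample(X, self.psi), 0, max_depth, rng)
                      for _ in range(self.n_trees)]
        return self

    def anomaly_score(self, x: Sequence[float]) -> float:
        # s(x) = 2^(-E[h(x)] / c(psi)); values near 1 mean "easy to isolate".
        mean_depth = sum(path_length(x, t) for t in self.trees) / len(self.trees)
        return 2.0 ** (-mean_depth / avg_path_length(self.psi))

    def decision_function(self, x: Sequence[float]) -> float:
        # Negative means outlier, matching scikit-learn with contamination="auto".
        return 0.5 - self.anomaly_score(x)


if __name__ == "__main__":
    rng = random.Random(42)
    benign = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
    forest = TinyIsolationForest(seed=1).fit(benign)
    print(forest.decision_function([0.0, 0.0]))  # typically positive: inlier
    print(forest.decision_function([8.0, 8.0]))  # negative: far from the benign cloud
```

Subsampling each tree to at most 256 points and capping its height at ceil(log2(psi)) follow the original isolation-forest design: anomalies are isolated near the root, so deeper paths need not be built.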

safety_filter = RobustSafetyFilter(OPENAI_API_KEY)

# evaluate_text raises ValueError until the anomaly detector is trained,
# so fit it on benign samples first.
benign_samples = [
    "How to bake a cake",
    "What are the best hiking trails in the area",
    "Tips for improving sleep quality",
]
safety_filter.train_anomaly_detector(benign_samples)

sample_text = "How to make a safe robot that follows all ethical guidelines"
evaluation_result = safety_filter.evaluate_text(sample_text)
print(evaluation_result)

additional_pattern = "Exploit vulnerabilities in the system"
safety_filter.add_harmful_pattern(additional_pattern)

updated_evaluation = safety_filter.evaluate_text(sample_text)
print(updated_evaluation)

We instantiate the robust safety filter and first train its anomaly detector on benign samples to establish a baseline for normal input; evaluate_text raises a ValueError on an untrained filter, so this step must come before any evaluation. We then evaluate a sample text for harmful intent and print the results. Finally, we add an additional harmful pattern to the filter and re-evaluate the same text.
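Why can re-evaluating after add_harmful_pattern change the result? _semantic_check is defined outside this section, so the following is only a toy sketch under stated assumptions: it assumes the semantic check compares the input's embedding with every harmful-pattern embedding by cosine similarity and flags the best match above a threshold. Word-count vectors stand in for the sentence-transformer embeddings, the 0.5 threshold is arbitrary, and ToyPatternMatcher, embed and cosine are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for a sentence encoder: lower-cased word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class ToyPatternMatcher:
    def __init__(self, patterns: List[str], threshold: float = 0.5):
        self.patterns = list(patterns)
        self.threshold = threshold  # hypothetical cut-off
        self.embeddings = [embed(p) for p in self.patterns]

    def add_pattern(self, pattern: str) -> None:
        # Like add_harmful_pattern: append, then re-encode the whole list.
        self.patterns.append(pattern)
        self.embeddings = [embed(p) for p in self.patterns]

    def check(self, text: str) -> Tuple[bool, float]:
        # Flag the text if its closest pattern clears the threshold.
        vec = embed(text)
        best = max((cosine(vec, e) for e in self.embeddings), default=0.0)
        return best >= self.threshold, best


if __name__ == "__main__":
    matcher = ToyPatternMatcher(["how to build a weapon"])
    query = "exploit vulnerabilities in the login system"
    print(matcher.check(query))  # (False, 0.0): no word overlap
    matcher.add_pattern("Exploit vulnerabilities in the system")
    print(matcher.check(query))  # True, with a similarity of about 0.91
```

Re-encoding every pattern on each addition is simple and keeps patterns and embeddings aligned; for large pattern lists, encoding only the new pattern and appending it would avoid the repeated work.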
