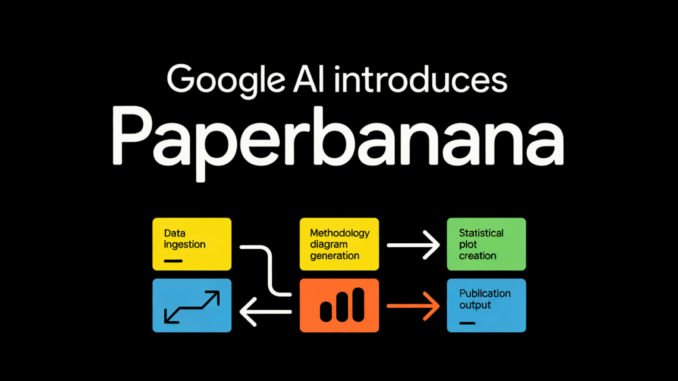

Producing polished illustrations for publications remains one of the most time-consuming steps in the research workflow. Autonomous ‘AI scientist’ systems can already handle literature reviews and coding, yet they still struggle to communicate complex discoveries visually. A research team from Google and Peking University has introduced ‘PaperBanana’, a framework that aims to close this gap by using a multi-agent system to automate the creation of high-quality academic diagrams and plots.

5 Specialized Agents: The Architecture

The ‘PaperBanana’ framework does not rely on a single prompt. Instead, it coordinates a team of 5 specialized agents that work in two phases to transform raw methodology text into publication-ready figures.

Phase 1: Linear Planning

Retriever Agent: This agent identifies the 10 most relevant reference examples from a database to guide the style and structure of the visuals.

Planner Agent: Translates technical methodology text into a detailed textual description of the target figure.

Stylist Agent: Functions as a design consultant to ensure that the output aligns with the “NeurIPS Look” by using specific color palettes and layouts.
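The planning phase is a straight pipeline in which each agent's output feeds the next. The Python sketch below illustrates only that data flow under stated assumptions; it is not PaperBanana's code. The `ReferenceExample` and `FigurePlan` types, the word-overlap `similarity` score, and the stubbed `plan` and `stylize` functions are hypothetical stand-ins for what are model-backed agents in the real system.

```python
from dataclasses import dataclass, field

TOP_K = 10  # the Retriever keeps the 10 most relevant reference examples


@dataclass
class ReferenceExample:
    """Hypothetical record for one figure in the reference database."""
    caption: str
    style_notes: str


@dataclass
class FigurePlan:
    description: str
    references: list[ReferenceExample]
    style: dict = field(default_factory=dict)


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap: a toy stand-in for the real relevance judgment."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def retrieve(method_text: str, database: list[ReferenceExample], k: int = TOP_K) -> list[ReferenceExample]:
    """Retriever Agent (sketch): rank references by relevance, keep the top k."""
    ranked = sorted(database, key=lambda ex: similarity(method_text, ex.caption), reverse=True)
    return ranked[:k]


def plan(method_text: str, refs: list[ReferenceExample]) -> str:
    """Planner Agent (stub): a real system would prompt a language model here."""
    return f"Diagram of: {method_text} (structure modeled on {len(refs)} references)"


def stylize(description: str) -> dict:
    """Stylist Agent (stub): attach hypothetical style guidance."""
    return {"palette": "soft pastels", "layout": "left-to-right flow"}


def linear_planning(method_text: str, database: list[ReferenceExample]) -> FigurePlan:
    """Phase 1: Retriever -> Planner -> Stylist, each step feeding the next."""
    refs = retrieve(method_text, database)
    description = plan(method_text, refs)
    return FigurePlan(description, refs, stylize(description))


# Toy usage with a synthetic 25-entry database.
db = [ReferenceExample(f"agent pipeline diagram {i}", "pastel boxes") for i in range(25)]
result = linear_planning("multi-agent pipeline for diagram generation", db)
print(len(result.references))  # 10
print(result.description)
```

Swapping the stubs for model calls would leave the shape unchanged: retrieval narrows the context to 10 examples before any planning or styling happens.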

Phase 2: Iterative Refinement

Visualizer Agent: This agent transforms the textual description into a visual output. For diagrams, it utilizes image models like Nano-Banana-Pro, while for statistical plots, it generates executable Python Matplotlib code.

Critic Agent: Inspects the generated image against the source text to identify factual errors or visual inconsistencies, then returns its feedback to the Visualizer Agent. This critique-and-revise loop runs for 3 rounds of refinement.
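The refinement phase is a closed loop between the Visualizer and the Critic. This minimal sketch assumes the two agents can be modeled as plain functions: `visualize(description, feedback)` returns a figure and `critique(figure, description)` returns a list of issues. Those signatures, the `Critique` type, the toy agents, and the early exit when the Critic finds nothing are assumptions for illustration; the article only states that the Critic provides feedback over 3 rounds.

```python
from dataclasses import dataclass, field
from typing import Callable

MAX_ROUNDS = 3  # the Critic drives 3 rounds of refinement


@dataclass
class Critique:
    issues: list[str] = field(default_factory=list)  # factual or visual problems found

    @property
    def ok(self) -> bool:
        return not self.issues


def refine(
    description: str,
    visualize: Callable[[str, list[str]], str],
    critique: Callable[[str, str], Critique],
    rounds: int = MAX_ROUNDS,
) -> tuple[str, int]:
    """Phase 2 (sketch): render, then let the Critic drive up to `rounds` revisions."""
    figure = visualize(description, [])  # first draft, no feedback yet
    revisions = 0
    for _ in range(rounds):
        review = critique(figure, description)  # compare figure against source text
        if review.ok:  # assumption: stop early once the Critic finds no issues
            break
        figure = visualize(description, review.issues)  # revise using the feedback
        revisions += 1
    return figure, revisions


def make_toy_agents(bugs: int):
    """Hypothetical stubs: each piece of feedback fixes exactly one known issue."""
    remaining = bugs

    def visualize(description: str, feedback: list[str]) -> str:
        nonlocal remaining
        remaining -= len(feedback)
        return f"{description} ({remaining} known issues left)"

    def critique(figure: str, description: str) -> Critique:
        return Critique(["mislabeled arrow"] if remaining > 0 else [])

    return visualize, critique


print(refine("method diagram", *make_toy_agents(2)))  # ('method diagram (0 known issues left)', 2)
print(refine("method diagram", *make_toy_agents(5)))  # ('method diagram (2 known issues left)', 3)
```

With 5 injected issues the loop stops after its 3-round budget, so 2 issues survive; the round cap bounds cost even when the Critic never signs off.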

Outperforming Baselines on a NeurIPS 2025 Benchmark

The research team also introduced PaperBananaBench, a dataset of 292 test cases sourced from actual NeurIPS 2025 publications. Using a VLM-as-a-Judge approach, in which a vision-language model evaluates the generated figures, they compared PaperBanana against leading baseline methods.
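The article does not spell out how the VLM judge's verdicts become scores. Purely as an assumption, the sketch below has a judge compare each generated figure with a reference figure per dimension, maps win/tie/loss to 100/50/0 points, and averages per dimension. `VERDICT_POINTS`, `score`, and `toy_judge` are hypothetical, and the toy judge compares string lengths where a real one would call a vision-language model on images.

```python
from statistics import mean
from typing import Callable

# Assumed scoring rule: the judge compares a generated figure with a reference
# figure and returns a verdict, which is mapped to points.
VERDICT_POINTS = {"win": 100.0, "tie": 50.0, "loss": 0.0}
DIMENSIONS = ("conciseness", "readability", "aesthetics")

# (candidate, reference, dimension) -> "win" | "tie" | "loss"
Judge = Callable[[str, str, str], str]


def score(cases: list[tuple[str, str]], judge: Judge) -> dict[str, float]:
    """Average the judge's points per dimension over (candidate, reference) pairs."""
    return {
        dim: mean(VERDICT_POINTS[judge(cand, ref, dim)] for cand, ref in cases)
        for dim in DIMENSIONS
    }


def toy_judge(candidate: str, reference: str, dimension: str) -> str:
    """Hypothetical stand-in for a VLM call; a real judge would inspect images."""
    if dimension == "conciseness":
        return "win" if len(candidate) < len(reference) else "loss"
    return "tie"


cases = [("fig A", "reference figure A"), ("a much longer figure B", "fig B")]
print(score(cases, toy_judge))
# {'conciseness': 50.0, 'readability': 50.0, 'aesthetics': 50.0}
```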

The system excels in producing ‘Agent & Reasoning’ diagrams, achieving an overall score of 69.9%. Additionally, it offers an automated ‘Aesthetic Guideline’ that favors ‘Soft Tech Pastels’ over harsh primary colors.
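How the ‘Aesthetic Guideline’ is encoded is not described in the article. One way to make a ‘soft pastels over harsh primaries’ rule checkable is to threshold colors in HSV space, as in this sketch. The thresholds (`MAX_SATURATION`, `MIN_VALUE`), the helper names, and the example hex colors are assumptions, not PaperBanana's actual palette.

```python
import colorsys

# Hypothetical thresholds: "soft" means light (high HSV value) and muted (low saturation).
MAX_SATURATION = 0.5
MIN_VALUE = 0.7


def hex_to_rgb(hex_color: str) -> tuple[float, ...]:
    """Convert '#RRGGBB' to RGB floats in [0, 1]."""
    h = hex_color.lstrip("#")
    return tuple(int(h[i:i + 2], 16) / 255 for i in (0, 2, 4))


def is_soft_pastel(hex_color: str) -> bool:
    _, saturation, value = colorsys.rgb_to_hsv(*hex_to_rgb(hex_color))
    return saturation <= MAX_SATURATION and value >= MIN_VALUE


def harsh_colors(palette: list[str]) -> list[str]:
    """Return the colors that break the pastel guideline, e.g. for a Critic to flag."""
    return [c for c in palette if not is_soft_pastel(c)]


palette = ["#A8C8E8", "#FFB3BA", "#FF0000", "#0000FF"]  # illustrative colors only
print(harsh_colors(palette))  # ['#FF0000', '#0000FF']
```

HSV is used rather than HLS because light pastels such as `#FFB3BA` reach full saturation in HLS, while their HSV saturation stays low.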

Statistical Plots: Code vs. Image

Statistical plots require a level of numerical precision that standard image models may lack. PaperBanana addresses this by having the Visualizer Agent generate code instead of pixels.

Image Generation: Image models excel at aesthetics but can suffer from ‘numerical hallucinations’ or repeated elements.

Code-Based Generation: Rendering plots with the Matplotlib library ensures 100% data fidelity, because every mark is drawn from the exact input values rather than synthesized as pixels.
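The code path can be sketched as ‘emit Matplotlib code, then execute it’. In this example `generate_plot_code` is a hypothetical, templated stand-in for the Visualizer Agent (the real agent writes code with a language model), and the data values are toy numbers. Because the values are embedded in the code rather than drawn as pixels, the rendered bar heights can be checked against the input directly.

```python
import io
import math

import matplotlib

matplotlib.use("Agg")  # headless backend: render without a display
import matplotlib.pyplot as plt


def generate_plot_code(data: dict[str, float], title: str) -> str:
    """Hypothetical stand-in for the Visualizer Agent: emit Matplotlib code as text.

    Templated here so the input values are embedded verbatim
    (repr round-trips Python floats exactly).
    """
    return (
        "fig, ax = plt.subplots(figsize=(4, 3))\n"
        f"labels = {list(data)!r}\n"
        f"values = {list(data.values())!r}\n"
        "bars = ax.bar(labels, values, color='#A8C8E8')\n"
        f"ax.set_title({title!r})\n"
        "ax.set_ylabel('Score')\n"
    )


def render(code: str) -> dict:
    """Execute generated plotting code; sandbox untrusted code in a real system."""
    namespace = {"plt": plt}
    exec(code, namespace)
    return namespace


data = {"Method A": 0.71, "Method B": 0.64, "Method C": 0.83}  # toy values
ns = render(generate_plot_code(data, "Toy comparison"))

buffer = io.BytesIO()  # or a file path such as "plot.png"
ns["fig"].savefig(buffer, format="png", dpi=150)
plt.close(ns["fig"])

# Fidelity check: every bar height matches the value it was given.
heights = [bar.get_height() for bar in ns["bars"]]
print(all(math.isclose(h, v) for h, v in zip(heights, data.values())))  # True
```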

Domain-Specific Aesthetic Preferences in AI Research

According to the PaperBanana style guide, aesthetic choices can vary by research domain to match the expectations of different academic communities.
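A domain-dependent style guide amounts to a lookup with a sensible fallback. In this sketch every domain key, color, font, and note is invented for illustration (only the ‘Agent & Reasoning’ category name comes from the article), and `StyleGuideline` and `guideline_for` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StyleGuideline:
    palette: tuple[str, ...]
    font_family: str
    notes: str


# Hypothetical default reflecting the "soft pastels over harsh primaries" preference.
DEFAULT_GUIDELINE = StyleGuideline(("#A8C8E8", "#FFB3BA", "#BAE1C4"), "sans-serif", "soft tech pastels")

# Hypothetical per-domain entries, invented for illustration.
DOMAIN_GUIDELINES = {
    "agent & reasoning": StyleGuideline(
        ("#C3B1E1", "#A8C8E8", "#FFD8A8"), "sans-serif", "rounded boxes, flow arrows"
    ),
    "theory": StyleGuideline(("#4D4D4D", "#9E9E9E", "#D9D9D9"), "serif", "minimal grayscale"),
}


def guideline_for(domain: str) -> StyleGuideline:
    """Look up a domain's guideline, falling back to the default style."""
    return DOMAIN_GUIDELINES.get(domain.strip().lower(), DEFAULT_GUIDELINE)


print(guideline_for("Agent & Reasoning").notes)  # rounded boxes, flow arrows
print(guideline_for("Robotics").notes)  # soft tech pastels
```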

Comparison of Visualization Paradigms

For statistical plots, there is a notable trade-off between using an image generation model (IMG) and generating executable code (Coding).

Key Takeaways

Multi-Agent Collaborative Framework: ‘PaperBanana’ uses 5 specialized agents (Retriever, Planner, Stylist, Visualizer, and Critic) to transform technical text into high-quality methodology diagrams and plots.

Dual-Phase Generation Process: The workflow consists of a Linear Planning Phase for reference retrieval, figure planning, and aesthetic guideline setting, followed by a 3-round Iterative Refinement Loop in which errors are identified and corrected to improve accuracy.

Superior Performance on PaperBananaBench: Evaluation on 292 test cases from NeurIPS 2025 publications shows that the framework outperforms baseline methods in Overall Score (+17.0%), Conciseness (+37.2%), Readability (+12.9%), and Aesthetics (+6.6%).

Precision-Focused Statistical Plots: For statistical data, the system switches from image generation to executable Python Matplotlib code, ensuring numerical precision and avoiding issues such as the ‘numerical hallucinations’ common in standard AI image generators.

For more information, refer to the original Paper and Repo.
